Fastest possible text updates with or without React

Michael

Electric UI is a hardware-focused user interface framework, built around the concept of messageIDs that represent specific variables in hardware that change. Often these variables can be represented with text. The component that does this is called the Printer.

In this article we'll optimise the Printer component to be significantly faster than its naive implementation.

All flame graphs were captured on a 2018 Mac Mini at 6x CPU slowdown. The Mac has an i7-8700B. I'm certain there are computers out there still kicking that have a worse CPU than what's represented by this combination.

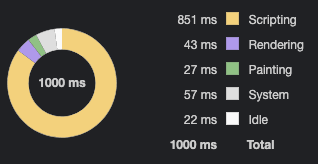

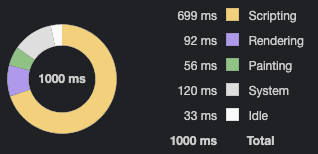

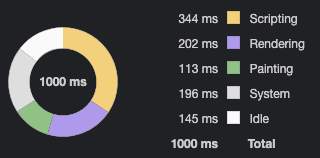

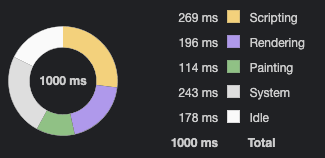

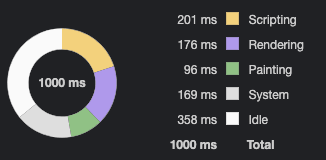

Scripting time over a second of updates will be used as our benchmark, since the actual render times are quite noisy. Render time and FPS numbers will be thrown in for context, but the end goal is to reduce that scripting time.

React developer mode was also used for all flame graphs unless otherwise specified. While production mode has significant performance gains, the apps still have to be written in developer mode. If there's poor performance in this mode, the developer suffers. Our end customers are these developers, so we have to keep them happy. Plus, I'm a developer, and I prefer being happy [citation needed].

Not batching

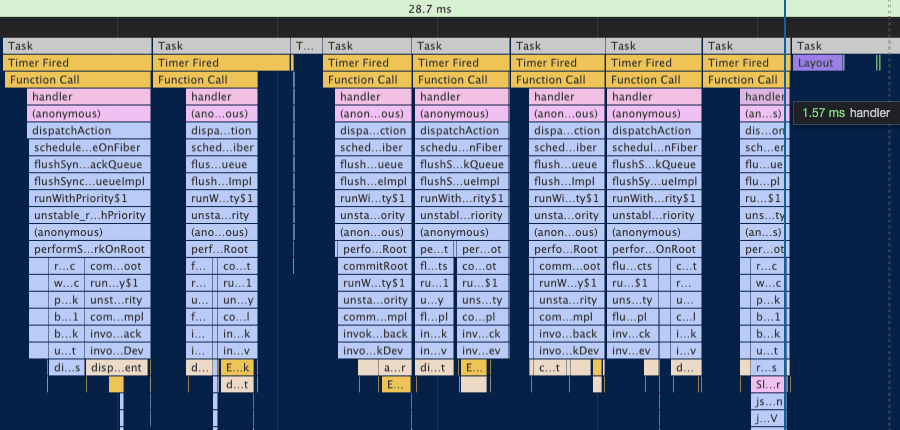

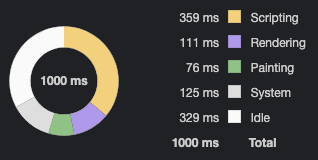

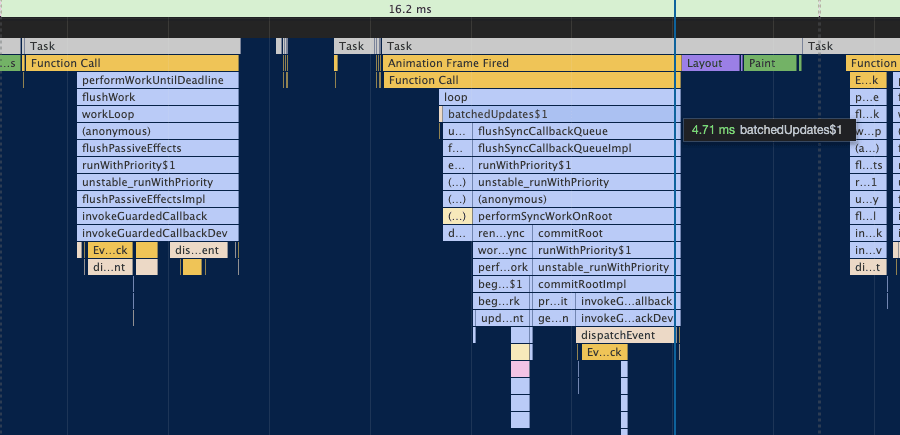

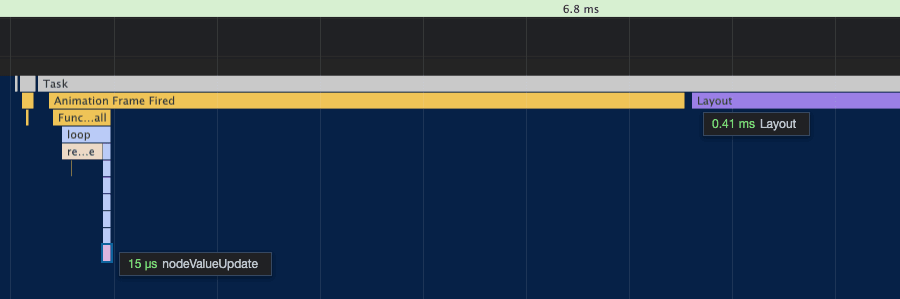

Below is a flame graph of 7 printers in their most naive implementation, simply triggering a React re-render when they receive data.

Each React render takes ~1.5-4ms, roughly 2ms on average, resulting in roughly 30-40fps.

The component looks like this:

```tsx
function SlowReactPrinter(props: SlowReactPrinterProps) {
  const [state, setState] = useState(false)

  useUpdate((newState: boolean) => {
    setState(newState)
  })

  return <span>{state ? 1 : 0}</span>
}
```

The useUpdate hook can be replaced with any externally triggered update mechanism. It can be a timer or a subscription to a Redux store; the implementation details don't matter. For these flame graphs, updates are pushed at the refresh rate of the monitor.

Needless to say, 40fps to update some text labels isn't great. Every time a garbage collection comes along, the app stutters. In general it doesn't feel good.

React Profiler extension

A quick note on the React Profiler extension - I don't think it's useful for this kind of performance optimisation. While none of its information is incorrect, it's not helpful in improving the situation. It identifies that the thing that's updating is the span in the component, but doesn't help to identify the next steps. It's technically correct that the actual text updates are very quick, but looking at this screenshot you would think that performance is 'just fine' when in reality, the app is pinning the JS thread at 85% just to render 7 text labels.

Personally I never use it and always profile the interaction with the Chrome performance tab.

If I could offer some constructive criticism, I would recommend grouping the right hand render list into frames with some kind of visual indicator to make it clear when renders could be batched.

React Production Mode

Switching on production mode offers great performance benefits. Even this naive implementation results in 144fps updates. Again, this doesn't help our specific situation of improving the developer experience, but it's good to keep in mind.

Interestingly, more time is spent rendering because more frames are being rendered.

Batching

The majority of the cost of the naive method was React's systematic render cost. Diffing the virtual DOM isn't free, especially compared to the cost of updating the text itself.

The DOM doesn't need to be updated more than once a frame, since paints only happen once per frame. By batching these updates, this systematic cost can be paid once per frame instead of once per update.
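As a rough illustration of the idea, here is a minimal sketch of a per-frame write queue. The names `createWriteQueue`, `Scheduler` and `runFrame` are made up for this example and are not taken from any library. The scheduler is injected so the sketch can run outside a browser; in a real app it would wrap `requestAnimationFrame`.

```typescript
// A hypothetical per-frame write queue; names are illustrative only.
type Scheduler = (flush: () => void) => void

function createWriteQueue(schedule: Scheduler) {
  let pending: Array<() => void> = []
  let scheduled = false

  function flush() {
    // Swap the queue first so writes queued during a flush run next frame.
    const toRun = pending
    pending = []
    scheduled = false
    toRun.forEach(write => write())
  }

  return {
    write(fn: () => void) {
      pending.push(fn)
      // Only request one flush per frame, however many writes arrive.
      if (!scheduled) {
        scheduled = true
        schedule(flush)
      }
    },
  }
}

// In a browser the scheduler would be `cb => requestAnimationFrame(cb)`.
// Here a manual scheduler stands in for the frame boundary.
let runFrame: () => void = () => {}
let framesRequested = 0
const queue = createWriteQueue(flush => {
  framesRequested++
  runFrame = flush
})

const log: string[] = []
queue.write(() => { log.push("a") })
queue.write(() => { log.push("b") })
queue.write(() => { log.push("c") })

// Three writes were queued, but only one frame callback was requested.
runFrame()
```

All three writes run inside the single flush, so whatever systematic cost is attached to a flush is paid once rather than three times.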

I would recommend the rafz package from the wonderful Poimandres OSS developer collective for all your frame batching needs. The library is tiny, effective and a pleasure to use.

https://github.com/pmndrs/rafz

It's trivial to hook it up to React's batching mechanism:

```ts
import { raf } from 'rafz'
import { unstable_batchedUpdates } from 'react-dom'

raf.batchedUpdates = unstable_batchedUpdates
```

When testing your code, I recommend using setImmediate as a 'polyfill' to have renders happen immediately:

```ts
import { raf } from 'rafz'

raf.use(setImmediate)
```

Electric UI handles frame batching by building a map of changes and flushing them per frame. MessageIDs can change multiple times per frame, and only the latest one needs to be rendered.
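A "latest value wins" change map could look something like the sketch below. This is not Electric UI's actual implementation; `ChangeBatcher`, its methods and the `temperature` messageID are hypothetical names chosen for illustration, and values are assumed to be numbers to keep it short.

```typescript
// Hypothetical sketch of a change map that is flushed once per frame.
type Listener = (value: number) => void

class ChangeBatcher {
  private pending = new Map<string, number>()
  private listeners = new Map<string, Listener[]>()

  subscribe(messageID: string, listener: Listener) {
    const list = this.listeners.get(messageID) ?? []
    list.push(listener)
    this.listeners.set(messageID, list)
  }

  // Called as often as data arrives; later values overwrite earlier ones.
  push(messageID: string, value: number) {
    this.pending.set(messageID, value)
  }

  // Called once per frame, for example from a requestAnimationFrame callback.
  flush() {
    const changes = this.pending
    this.pending = new Map<string, number>()
    changes.forEach((value, messageID) => {
      const list = this.listeners.get(messageID) ?? []
      list.forEach(listener => listener(value))
    })
  }
}

const batcher = new ChangeBatcher()
const rendered: number[] = []
batcher.subscribe("temperature", value => { rendered.push(value) })

// Three updates arrive within the same frame...
batcher.push("temperature", 20)
batcher.push("temperature", 21)
batcher.push("temperature", 22)

// ...but only the latest one reaches the listener.
batcher.flush()
```

Keying the map by messageID means the cost of a flush scales with the number of distinct variables that changed, not with the number of updates received.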

Our toy example can be batched by wrapping the setState call in a function that's passed to rafz.

```tsx
import { raf } from 'rafz'
import { unstable_batchedUpdates } from 'react-dom'

raf.batchedUpdates = unstable_batchedUpdates

function SixtyFPSReactPrinter(props: SlowReactPrinterProps) {
  const [state, setState] = useState(false)

  useUpdate((newState: boolean) => {
    raf.write(() => {
      setState(newState)
    })
  })

  return <span>{state ? 1 : 0}</span>
}
```

By batching these updates, no matter how many components are rendered or how many updates happen per frame, React will only do a render once per frame.

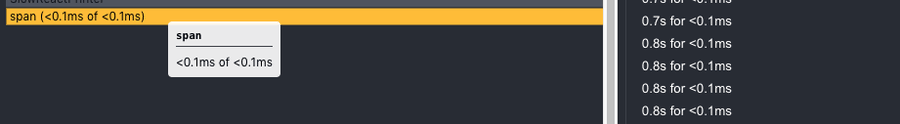

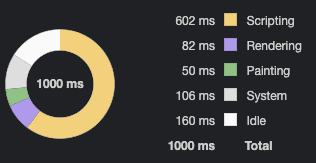

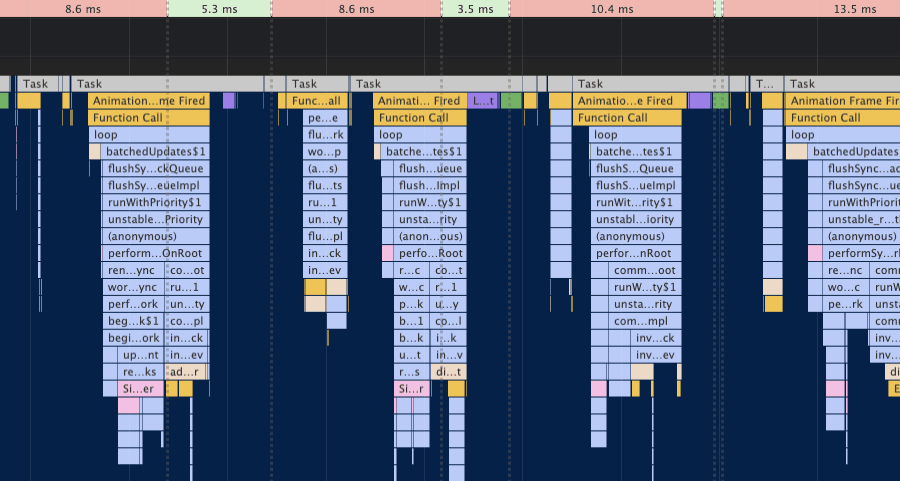

Each React render now takes about 5ms. Render time is now ~16ms, which hits that magical 60fps mark.

However, I have a 144hz monitor, and even phones often have high refresh rate displays these days. What happens when we switch from a 60hz display to a 144hz display and begin pushing updates at this higher rate?

Unfortunately we're dropping frames and only hitting about 80fps.
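The arithmetic behind this is simple enough to write down. The helper name `frameBudgetMs` below is just for illustration; it computes the time available per frame at a given refresh rate.

```typescript
// Time available per frame at a given refresh rate, in milliseconds.
function frameBudgetMs(refreshRateHz: number): number {
  return 1000 / refreshRateHz
}

const budget60 = frameBudgetMs(60) // roughly 16.7ms per frame
const budget144 = frameBudgetMs(144) // roughly 6.9ms per frame
```

A React render of about 5ms fits easily inside the 60hz budget, but it uses most of the 144hz budget before the browser has done any layout or painting.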

Update the textContent directly

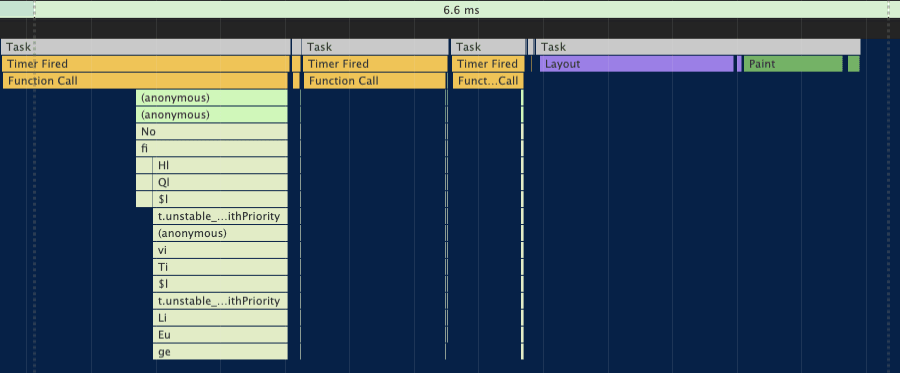

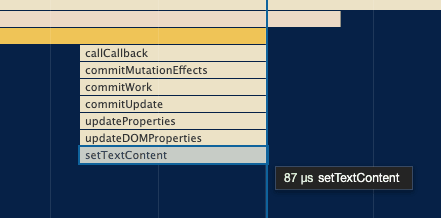

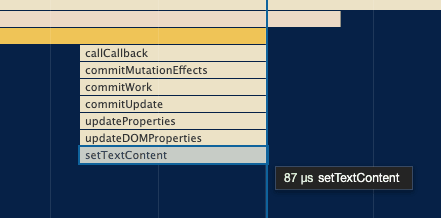

If we zoom in on the "actual work" being done in the flame graph, it's taking 87 μs to update a text label. Sometimes this function call doesn't show up at all, which means it took so little time that the profiler didn't bother including it.

React's reconciliation step is useful when you don't know what exactly updated. However, Electric UI's event driven architecture can give us 'truly reactive' updates if we want them, giving us exactly the updated information and nothing more.

Many apps have the potential to be updated this way. Redux stores can be subscribed to with selectors that trigger callbacks only when the relevant state is updated. This allows for an opt-in fast-path to frequent updates.
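To make the selector idea concrete, here is a minimal sketch of a store with selector-based subscriptions. It is a hypothetical stand-in for Redux or a similar API, not the real thing; `createStore` and `subscribeWithSelector` are names defined in this example only, and slices are compared with `===`.

```typescript
// Hypothetical minimal store, standing in for Redux or a similar API.
type Unsubscribe = () => void

function createStore<S>(initial: S) {
  let state = initial
  const subscribers = new Set<() => void>()

  return {
    getState: () => state,
    setState(next: S) {
      state = next
      subscribers.forEach(notify => notify())
    },
    // Calls `onChange` only when the selected slice changes (compared with ===).
    subscribeWithSelector<T>(
      selector: (s: S) => T,
      onChange: (value: T) => void
    ): Unsubscribe {
      let previous = selector(state)
      const check = () => {
        const current = selector(state)
        if (current !== previous) {
          previous = current
          onChange(current)
        }
      }
      subscribers.add(check)
      return () => {
        subscribers.delete(check)
      }
    },
  }
}

const store = createStore({ led: false, temperature: 20 })
const ledUpdates: boolean[] = []
store.subscribeWithSelector(
  s => s.led,
  (value: boolean) => { ledUpdates.push(value) }
)

store.setState({ led: false, temperature: 21 }) // led unchanged: no callback
store.setState({ led: true, temperature: 21 }) // led changed: callback fires
```

The callback receives exactly the value that changed, which is what makes it possible to skip a general-purpose diff.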

Since we can be notified of updates, we can imperatively update the DOM ourselves, avoiding React's reconciliation step after the initial components are rendered.

React offers an escape hatch for interacting directly with DOM elements with the ref API.

```tsx
function FasterReactPrinter(props: SlowReactPrinterProps) {
  const ref = useRef<HTMLSpanElement>(null)

  const initialState = useUpdate((newState: boolean) => {
    // the name is purely for better visibility in the flame graph
    raf.write(function textContentUpdate() {
      if (ref.current) {
        ref.current.textContent = String(newState ? 1 : 0)
      }
    })
  })

  return <span ref={ref}>{initialState ? 1 : 0}</span>
}
```

The span provides a mutable reference that can be used for imperative updates when they come in. The initial state can be pulled from the useUpdate hook when React initially renders the component.

If your app uses a Redux store or similar API, in the initial render the store can be read imperatively then subscribed to for future updates.

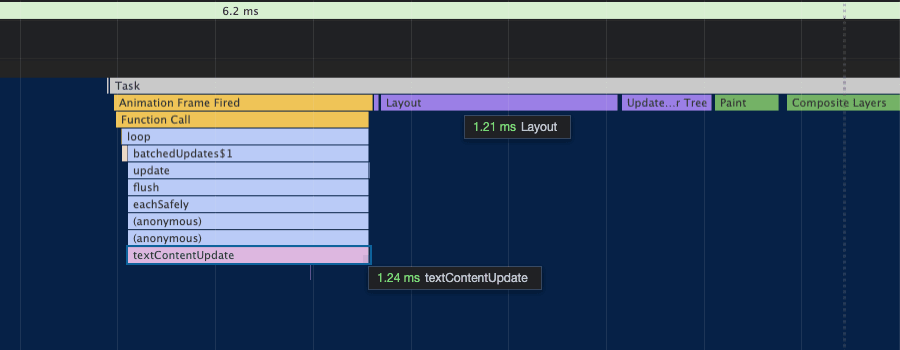

The renders are still batched, since there's no use doing them more than once a frame.

These text updates are now taking 1ms or less, at 'over' 144fps. At this stage the text updates are pretty close to the noise floor. Some take microseconds, others like this one take a little longer. The exact values don't matter, just that our overall scripting time is going down. Again, we're rendering more frames, so the rendering time has increased.

The scripting time is now below that of React Production mode!

When I originally went through this process, updating the textContent property of the span node caused excessive re-layouts. In this toy example it's not a problem, but in the real app my next step was to take a look at the W3 spec to try and figure out why the layouts were thrashing.

Jumping to the relevant section in the W3 spec:

https://www.w3.org/TR/2004/REC-DOM-Level-3-Core-20040407/core.html#Node3-textContent

> On setting, any possible children this node may have are removed and, if it the new string is not empty or null, replaced by a single Text node containing the string this attribute is set to.

The replacement of that Text node will be slower than merely updating the text inside it. It can also cause a re-layout in some circumstances.
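The difference is about node identity. The sketch below uses toy classes (`ToyText` and `ToyElement`, invented for this example) rather than the real DOM, and only models the behaviour described in the quoted spec text: setting `textContent` swaps in a new Text node, while setting `nodeValue` mutates the existing one.

```typescript
// Toy stand-ins for DOM nodes, only to illustrate node identity. Not the real DOM.
class ToyText {
  nodeValue: string
  constructor(value: string) {
    this.nodeValue = value
  }
}

class ToyElement {
  firstChild: ToyText | null = null

  // Mirrors the quoted spec text: remove any children, then add a single new
  // Text node if the string is not empty.
  set textContent(value: string) {
    this.firstChild = value === "" ? null : new ToyText(value)
  }
}

const span = new ToyElement()
span.textContent = "0"
const before = span.firstChild

// Setting textContent again creates a different Text node.
span.textContent = "1"
const replaced = span.firstChild !== before

// Setting nodeValue keeps the same Text node and only changes its contents.
const kept = span.firstChild
if (kept) kept.nodeValue = "0"
const sameNode = span.firstChild === kept
```

In this model `replaced` and `sameNode` both end up `true`. A real browser may optimise some of this internally, so treat the model as a reading of the spec rather than a claim about any particular engine.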

Build our own Text node and update its nodeValue

Instead of replacing the Text node each update, we can create one, keep it around, and imperatively update it.

React doesn't offer the ability to attach a ref to a Text node. Instead we can attach a ref to the span and build our own Text node, taking responsibility for its lifecycle.

```tsx
function FastReactPrinter(props: SlowReactPrinterProps) {
  const spanRef = useRef<HTMLSpanElement>(null)
  const textRef = useRef<Text | null>(null)

  const initialState = useUpdate((newState: boolean) => {
    // the name is purely for better visibility in the flame graph
    raf.write(function nodeValueUpdate() {
      if (textRef.current) {
        textRef.current.nodeValue = String(newState ? 1 : 0)
      }
    })
  })

  // Create a bespoke text node and attach it inside the span ref
  useLayoutEffect(() => {
    const textNode = document.createTextNode(String(initialState ? 1 : 0))

    // The spanRef will have been created by now
    const currentSpanRef = spanRef.current!

    // Append the textNode to the span.
    currentSpanRef.appendChild(textNode)

    // Update our ref of the textNode
    textRef.current = textNode

    // When the component unmounts, remove the child first
    return () => {
      // Use a closure to store the currentSpanRef.
      currentSpanRef.removeChild(textNode)
    }
  }, [])

  return <span ref={spanRef} />
}
```

Using the useLayoutEffect hook, we synchronously mutate the DOM, adding our Text node, right after React does its own pass. We append it as a child to the span, and store a reference to it in the textRef ref.

When our updates occur, we modify the nodeValue of the Text node itself.

The exact numbers from the flame graph are again so close to the noise floor that they don't really matter.

This step didn't reduce the layout time as significantly as I would have thought, but the aggregate time from our sample has reduced again. We're beating React Production mode by even more!

What React was doing under the hood

Looking back to our original flame graph, we can dive into React's source code and take a look at how setTextContent works.

React's codebase might seem a little scary at first, but there's nothing like small bits of exposure to make it not seem as daunting.

```ts
/**
 * Set the textContent property of a node. For text updates, it's faster
 * to set the `nodeValue` of the Text node directly instead of using
 * `.textContent` which will remove the existing node and create a new one.
 *
 * @param {DOMElement} node
 * @param {string} text
 * @internal
 */
const setTextContent = function(node: Element, text: string): void {
  if (text) {
    const firstChild = node.firstChild

    if (
      firstChild &&
      firstChild === node.lastChild &&
      firstChild.nodeType === TEXT_NODE
    ) {
      firstChild.nodeValue = text
      return
    }
  }
  node.textContent = text
}
```

The function does the same thing as our final imperative version. We even find a comment that matches the W3 spec!

Summary of performance

React isn't doing anything wrong, it's just doing more than it needs to for this specific use case. Since we know exactly what is updating, we can imperatively handle it without needing to invoke React's reconciler.

Building the final application with React production mode on, we have even better performance!

Our final implementation is roughly 3x more performant than the naive implementation. This figure is also quite conservative, since we're hitting higher FPS numbers, rendering more frames, with less work.

Where the naive full update took around 15ms, the final version does it in 15 μs, roughly three orders of magnitude improvement. Turns out if you do less, it's faster!

| Implementation | Scripting time | Improvement factor |
|---|---|---|
| Naive | 851ms | 1x |
| Batched 60hz | 602ms | 1.41x |
| Batched 144hz | 699ms | 1.22x |
| textContent | 344ms | 2.47x |
| nodeValue | 269ms | 3.16x |
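For reference, each improvement factor is the naive scripting time divided by that implementation's scripting time. A small sketch of the calculation, using the figures from the table above (the helper name `improvementFactor` is made up for this example):

```typescript
// Scripting times in milliseconds, copied from the table above.
const naiveMs = 851
const measurements: Array<[string, number]> = [
  ["Batched 60hz", 602],
  ["Batched 144hz", 699],
  ["textContent", 344],
  ["nodeValue", 269],
]

// Improvement factor relative to a baseline, formatted to two decimal places.
function improvementFactor(baselineMs: number, measuredMs: number): string {
  return (baselineMs / measuredMs).toFixed(2) + "x"
}

const factors = measurements.map(
  ([name, ms]) => name + ": " + improvementFactor(naiveMs, ms)
)
```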

We can now hit 144hz comfortably in developer mode on our simulated 'slow' development machine!

The performance gains are reflected to a lesser extent in production mode as well.

| Implementation | Scripting time | Improvement factor |
|---|---|---|
| Production React + Naive | 359ms | 1x |
| Production React + nodeValue | 201ms | 1.78x |

Why do we use React at all if we're just going to update imperatively?

React has so many wins in so many places that needing to do a little bit of optimisation work infrequently doesn't nearly tip the balance of compromise away from React. The component based model means we can make these changes in a singular place and find the benefits across the entire app.

React is relatively unique amongst frontend libraries in that it doesn't make assumptions about the environment in which it's rendering. Other renderers for command line applications, mobile phones and ThreeJS not only exist, but are widely used. It might sound preachy, but I think React is better thought of as a JSX-based component design model rather than a frontend framework.

As an example, our charts are written as a containerised react-three-fiber component which transparently switches out the renderer from the DOM to a WebGL canvas. The ability to do that while presenting a uniform API to our users is pretty magical.

Going through a reconciliation step with a virtual DOM has an inherent systematic cost. That cost isn't a big deal for the majority of use cases. It's perfectly fine to take 50ms to change a page worth of content, but it's less acceptable to take 5ms to change a few text labels. With the above methods, however, we can get the best of both worlds!