Using Electron with Yarn PnP

Michael

If you are familiar with Yarn v2, you can skip ahead to our experience migrating (Our migration to Yarn v2). If you just want the benchmark results, skip to Results.

Background

Our command line distribution tool, arc, lets our users install an Electric UI template project in a single command, much like create-react-app.

Unlike create-react-app, we have a series of native dependencies as well as a dependency on Electron. Systems like prebuild provide a reasonable user experience by automatically downloading pre-compiled versions of these native dependencies. Electron provides its own post-install script for installing the 'native' part of itself.

The previous iteration of our templates had some annoyances:

- It took a long time to fetch, link and delete templates because of the size of the node_modules folder.

- GitHub is not a CDN: prebuild and Electron download speeds could sometimes drop to tens of kilobytes per second.

- Prebuilds are only generated when the packages change, not when Electron releases a new version. We wanted to stay more up to date with Electron releases.

- Yarn.lock files aren't portable between operating systems, but distributing a template without a yarn.lock inevitably means that patch and minor releases of dependencies unexpectedly break our template.

- People don't like seeing a node_modules folder with lots of files.

- The entire install process took too long.

Early solution

Early on, the installation of Electron and native dependencies was taken over completely by arc.

Our build system would build the full matrix of operating systems, supported native modules and supported Electron target versions. Each combination would be uploaded to our registry, along with the binaries for each supported Electron target.

Upon installation, arc would run the normal Yarn installation process with build scripts explicitly disabled, then fetch the Electron binaries and prebuilds.

On any change in the matrix, our build system would build the new missing combinations, allowing us to run the latest Electron even if the native modules we depended on hadn't received an update since the Electron release.
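As a rough illustration of that matrix, the sketch below enumerates every platform x native module x Electron target combination and keeps only the ones that haven't been built yet. The module name, the Electron versions and the string key format are illustrative assumptions, not arc's actual build configuration.

```javascript
// Hypothetical sketch of the build matrix: every platform x native module x Electron target.
function buildMatrix(platforms, nativeModules, electronVersions) {
  const combinations = [];
  for (const platform of platforms) {
    for (const nativeModule of nativeModules) {
      for (const electron of electronVersions) {
        combinations.push(`${nativeModule}|${platform}|electron-${electron}`);
      }
    }
  }
  return combinations;
}

// Only combinations that haven't been built and uploaded yet need a new build.
function missingCombinations(matrix, alreadyBuilt) {
  return matrix.filter((combination) => !alreadyBuilt.has(combination));
}

const matrix = buildMatrix(["win32", "linux", "darwin"], ["@serialport/bindings@9.0.0"], ["8.2.0", "9.0.0"]);
// Pretend everything for Electron 8.2.0 was built before Electron 9.0.0 came out.
const built = new Set(matrix.filter((combination) => combination.endsWith("electron-8.2.0")));
console.log(missingCombinations(matrix, built)); // the three electron-9.0.0 combinations
```

A new Electron release only adds one column to the matrix, so only those combinations get built, regardless of whether the native modules themselves changed.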

Hosting these files on our infrastructure guaranteed a consistent download experience.

Our problem with node_modules

A brief, simplified refresher of the node_modules resolution system:

When a module is required inside a Node project, the nearest node_modules folder is searched for the dependency. If it's not found, the resolver moves up a directory and searches the node_modules folder there, continuing all the way up to the filesystem root. This algorithm makes for a reasonable package resolution system, albeit with a couple of problems.
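A minimal sketch of that walk, assuming POSIX paths and ignoring file extensions, package.json main fields and caching: the hypothetical resolveBare function climbs from the requiring directory to the root and counts how many locations it has to probe. In real Node, each probe is a filesystem call.

```javascript
const path = require("path");

// Simplified model of Node's node_modules lookup for a bare specifier like "debug".
// `exists` stands in for the filesystem; in real Node every probe hits the disk.
function resolveBare(name, fromDir, exists) {
  let dir = path.posix.resolve(fromDir);
  let probes = 0;
  while (true) {
    // Node never looks for node_modules/node_modules, so those directories are skipped.
    if (path.posix.basename(dir) !== "node_modules") {
      const candidate = path.posix.join(dir, "node_modules", name);
      probes += 1;
      if (exists(candidate)) return { location: candidate, probes };
    }
    const parent = path.posix.dirname(dir);
    if (parent === dir) return { location: null, probes }; // reached the root
    dir = parent;
  }
}

const onDisk = new Set(["/home/user/project/node_modules/debug"]);
// Found at /home/user/project/node_modules/debug after 3 probes.
console.log(resolveBare("debug", "/home/user/project/src/components", (p) => onDisk.has(p)));
```

A missing package costs one probe per directory level all the way to the root, which is where the thousands of reads at startup come from.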

It's completely disk based. While these resolutions are cached, on startup Node will read thousands of files and folders trying to resolve every dependency. This isn't a problem in production, since Electric UI bundles all dependencies into a single file, but during development it slows down startup.

Common dependencies can be hoisted up a level to deduplicate files, but hoisting can't always be done perfectly (for example when packages depend on different versions of the same dependency), which results in duplicated files.
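The sketch below is a deliberately simplified model of hoisting (real package managers reason about the whole dependency tree, version ranges and peer dependencies): the most requested version of each package goes to the root, and every dependent that needs a different version gets its own nested copy, even when several dependents want the same one.

```javascript
// Simplified model of hoisting: each requirement says "parent needs name@version".
function hoist(requirements) {
  // Count how many dependents ask for each version of each package.
  const counts = new Map(); // name -> Map(version -> count)
  for (const { name, version } of requirements) {
    if (!counts.has(name)) counts.set(name, new Map());
    const byVersion = counts.get(name);
    byVersion.set(version, (byVersion.get(version) || 0) + 1);
  }
  // Hoist the most requested version of each package to the root node_modules.
  const hoisted = new Map();
  for (const [name, byVersion] of counts) {
    let best = null;
    for (const [version, count] of byVersion) {
      if (best === null || count > byVersion.get(best)) best = version;
    }
    hoisted.set(name, best);
  }
  // Every dependent that wants another version gets its own nested copy.
  const nested = requirements
    .filter(({ name, version }) => hoisted.get(name) !== version)
    .map(({ parent, name, version }) => `${parent}/node_modules/${name}@${version}`);
  return { hoisted, nested };
}

const { hoisted, nested } = hoist([
  { parent: "a", name: "debug", version: "4.1.1" },
  { parent: "b", name: "debug", version: "4.1.1" },
  { parent: "c", name: "debug", version: "3.2.6" },
  { parent: "d", name: "debug", version: "3.2.6" },
]);
console.log(hoisted.get("debug")); // "4.1.1" (ties go to the first version seen)
console.log(nested); // two separate copies of debug@3.2.6, under c and under d
```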

Copying, deleting and generally doing anything with this file structure takes an unexpectedly long time, even with excellent solid state drives. The experience is awful on hard disks, and Windows suffers even more, especially when deleting templates.

Generating this directory structure, whether by copying or linking files, takes a huge amount of time and is completely IO bound.

The node_modules folder has a marketing problem as a result of these real problems. JavaScript starts off on the wrong foot, especially regarding performance, and these symptoms don't help its case at all.

Yarn v2

Yarn v2 offered several improvements over Yarn v1. Most notable is its PnP system.

PnP overrides the node_modules resolution algorithm completely, starting with the on-disk storage of modules.

Instead of storing un-tarred copies, PnP stores zipped copies of each dependency at the maximum compression level (files are stored uncompressed in the archive if libzip thinks that leads to a more sensible result).
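To illustrate the "compress, unless storing is smaller" idea, here is a sketch using Node's zlib at its maximum level. This is an assumption about the general approach, not libzip's actual heuristic: source text shrinks well, while already-compressed data (simulated with random bytes) is stored as-is.

```javascript
const crypto = require("crypto");
const zlib = require("zlib");

// Illustrative only: compress at the maximum level, but store the entry uncompressed
// when compression doesn't pay off. This is not libzip's actual decision logic.
function chooseZipEntryEncoding(contents) {
  const deflated = zlib.deflateRawSync(contents, { level: zlib.constants.Z_BEST_COMPRESSION });
  return deflated.length < contents.length
    ? { method: "deflate", data: deflated } // ZIP compression method 8
    : { method: "store", data: contents }; // ZIP compression method 0
}

const sourceFile = Buffer.from("module.exports = require('./lib');\n".repeat(200));
const alreadyCompressed = crypto.randomBytes(1024); // high entropy, like a PNG or a .zip
console.log(chooseZipEntryEncoding(sourceFile).method); // "deflate"
console.log(chooseZipEntryEncoding(alreadyCompressed).method); // "store"
```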

All resolutions are pre-computed and placed in a .pnp.js file. This is loaded into memory, storing all package resolutions in essentially a hash map. Upon resolution, the package location is simply looked up, no extraneous readdir or stat calls required.
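A sketch of what "looking up" a resolution means, using a hypothetical, heavily simplified table modelled on the .pnp.js data: resolving a dependency is two map lookups (the issuer's declared dependencies, then the target's location), with no readdir or stat calls. The debug cache path contains a placeholder, not a real file name.

```javascript
// Hypothetical, heavily simplified model of PnP's resolution table.
// "<hash>" in the debug location is a placeholder, not a real cache file name.
const packageRegistry = new Map([
  ["@electricui/protocol-binary", new Map([
    ["npm:0.7.14", {
      packageLocation:
        "./.yarn/cache/@electricui-protocol-binary-npm-0.7.14-69473c714e-1386b4e0d8.zip/node_modules/@electricui/protocol-binary/",
      packageDependencies: new Map([["debug", "npm:4.1.1"]]),
    }],
  ])],
  ["debug", new Map([
    ["npm:4.1.1", {
      packageLocation: "./.yarn/cache/debug-npm-4.1.1-<hash>.zip/node_modules/debug/",
      packageDependencies: new Map(),
    }],
  ])],
]);

// Resolution is two lookups: the issuer's declared dependencies, then the target's location.
function resolveFrom(issuerName, issuerReference, request) {
  const issuerVersions = packageRegistry.get(issuerName);
  const issuer = issuerVersions && issuerVersions.get(issuerReference);
  if (!issuer) throw new Error(`Unknown package ${issuerName}@${issuerReference}`);
  const reference = issuer.packageDependencies.get(request);
  if (reference === undefined) {
    // This sketch treats an undeclared dependency as an error; Yarn's real resolver
    // has some additional fallback rules.
    throw new Error(`${issuerName} tried to access ${request}, but it isn't declared`);
  }
  return packageRegistry.get(request).get(reference).packageLocation;
}

console.log(resolveFrom("@electricui/protocol-binary", "npm:0.7.14", "debug"));
```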

The internal structure of the .pnp.js file looks like this if you are interested, but isn't the kind of thing you want to be parsing yourself.

```javascript
["@electricui/protocol-binary", [
  ["npm:0.7.14", {
    "packageLocation": "./.yarn/cache/@electricui-protocol-binary-npm-0.7.14-69473c714e-1386b4e0d8.zip/node_modules/@electricui/protocol-binary/",
    "packageDependencies": [
      ["@electricui/protocol-binary", "npm:0.7.14"],
      ["@electricui/core", "npm:0.7.14"],
      ["@electricui/protocol-binary-constants", "npm:0.7.10"],
      ["@electricui/utility-crc16", "npm:0.7.14"],
      ["debug", "npm:4.1.1"]
    ],
    "linkType": "HARD",
  }]
]],
```

Reading the package is done with libzip compiled to WASM, with near native performance.

This solution swaps the on-disk linking process for the generation of the .pnp.js file, which is significantly faster and uses far less IO. The marketing problem is also solved: the node_modules folder is completely gone!

Our only problems with PnP right now stem from slow adoption in the greater community.

VSCode currently doesn't support opening virtualised file systems like the one provided by PnP, although progress is being made on supporting this. In practice, it prevents the user from clicking through to a dependency's source in VSCode.

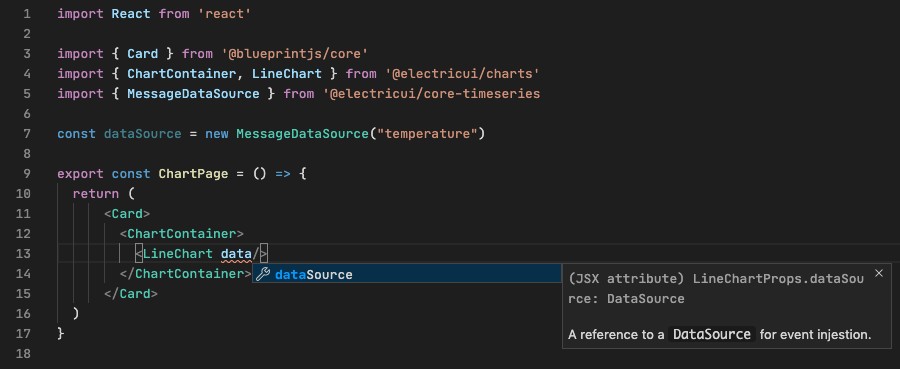

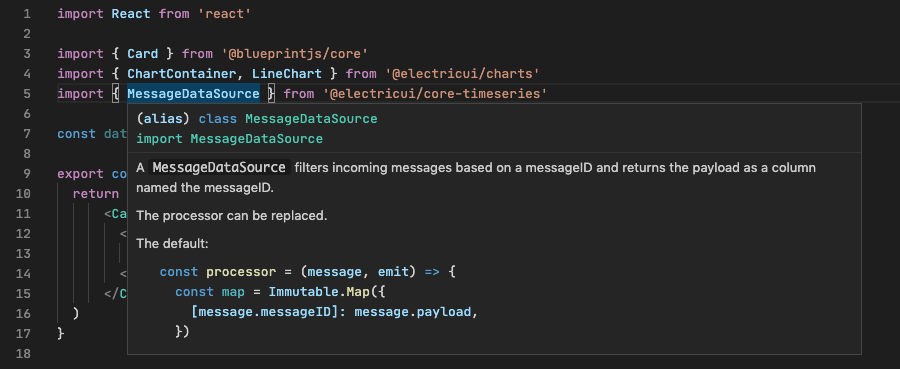

While autocomplete works:

As does JSDoc style information:

Trying to view the source of any packages results in an error that looks like:

```text
Unable to open 'index.d.ts': Unable to read file '~/project/.yarn/$$virtual/@electricui-core-timeseries-virtual-ae5f806fa2/0/cache/@electricui-core-timeseries-npm-0.7.14-e1d92310e6-e368554801.zip/node_modules/@electricui/core-timeseries/lib/index.d.ts' (Error: Unable to resolve non-existing file '~/project/.yarn/$$virtual/@electricui-core-timeseries-virtual-ae5f806fa2/0/cache/@electricui-core-timeseries-npm-0.7.14-e1d92310e6-e368554801.zip/node_modules/@electricui/core-timeseries/lib/index.d.ts').
```

While this is inconvenient, it isn't a deal breaker, assuming it is resolved soon.

Yarn v2 requires packages to declare their dependencies explicitly and will error if a package does not. Some packages developed in mono-repos make assumptions about the dependency tree instead of declaring these dependencies explicitly.

Yarn v2 offers the ability to 'patch' these with the packageExtensions configuration option, so it's not that big of a deal. Since adopting Yarn v2 we've opened about a dozen PRs fixing these problems upstream.

Our migration to Yarn v2

Peer Dependencies

Electric UI also runs a mono-repo development environment. Many of our packages have peer dependencies on things like Electron, React and Redux. We were also guilty of not explicitly defining this chain of dependencies.

As a more concrete example:

- utility-electron declares a peerDependency on electron.

- components-desktop requires utility-electron, but didn't declare a peerDependency on electron.

- components-desktop-blueprint requires components-desktop, but again didn't declare the peerDependency.

This worked in our non-PnP environments since the template always contained the peer dependency, but didn't work under PnP because of these missing links.
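Missing links like these can be caught mechanically. The hypothetical check below walks workspace manifests and flags any package that depends on a workspace package with a peer dependency it doesn't itself declare. It only looks one level deep, so fixing one link can surface the next one up the chain.

```javascript
// Hypothetical check over workspace manifests: if a package depends on a workspace package
// that has a peer dependency, it must itself declare that peer (as a dependency or peer).
function findMissingPeerLinks(manifests) {
  const byName = new Map(manifests.map((manifest) => [manifest.name, manifest]));
  const problems = [];
  for (const pkg of manifests) {
    const provided = new Set([
      ...Object.keys(pkg.dependencies || {}),
      ...Object.keys(pkg.peerDependencies || {}),
    ]);
    for (const dep of Object.keys(pkg.dependencies || {})) {
      const depManifest = byName.get(dep);
      if (!depManifest) continue; // external package, not checked here
      for (const peer of Object.keys(depManifest.peerDependencies || {})) {
        if (!provided.has(peer)) problems.push(`${pkg.name} -> ${dep} needs peer ${peer}`);
      }
    }
  }
  return problems;
}

// The chain from the example, with "*" as placeholder version ranges.
const workspaces = [
  { name: "utility-electron", peerDependencies: { electron: "*" } },
  { name: "components-desktop", dependencies: { "utility-electron": "*" } },
  { name: "components-desktop-blueprint", dependencies: { "components-desktop": "*" } },
];
console.log(findMissingPeerLinks(workspaces));
// [ 'components-desktop -> utility-electron needs peer electron' ]
```

Once components-desktop declares the electron peer, rerunning the check flags components-desktop-blueprint next.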

Yarn's error messages were pretty helpful, and they recently got much better. This took maybe an hour to fix across all our packages.

Don't assume node_modules exists

Our command line tool, arc, had to be refactored so that the native dependency installation did not assume packages lived in node_modules/<dep>.
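One way to find a package without hard-coding node_modules/<dep> is to ask Node's resolver for the package's package.json. Yarn PnP patches Node's resolution when .pnp.js is loaded, so this should work under both layouts. packageDirectory is a hypothetical helper name, and packages whose exports map doesn't expose ./package.json will throw.

```javascript
const path = require("path");

// Locate a package's directory through Node's resolver instead of assuming node_modules/<dep>.
// When .pnp.js is loaded, Yarn PnP patches this resolution, so the same call should work there.
// Throws if the package isn't installed or its "exports" map hides ./package.json.
function packageDirectory(name, fromDir) {
  const manifestPath = require.resolve(`${name}/package.json`, { paths: [fromDir] });
  return path.dirname(manifestPath);
}

// Example: packageDirectory("@serialport/bindings", process.cwd())
```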

Yarn Workspaces

We had been running a legacy version of lerna without Yarn workspaces for quite a while. Annoyingly, we had to run lerna bootstrap instead of yarn install to install dependencies, or Yarn would complain that our local packages didn't exist.

Quite a while ago Yarn developed workspaces to make it easier to deal with monorepos. We figured it would be a good intermediate step on the journey to Yarn v2. Adopting it took much less time than expected; we should have done so sooner.

Synchronous WebAssembly instantiation crashes

Initially we were considering running the Yarn runtime inside Electron. We ended up not going down this route, but it exposed an interesting problem.

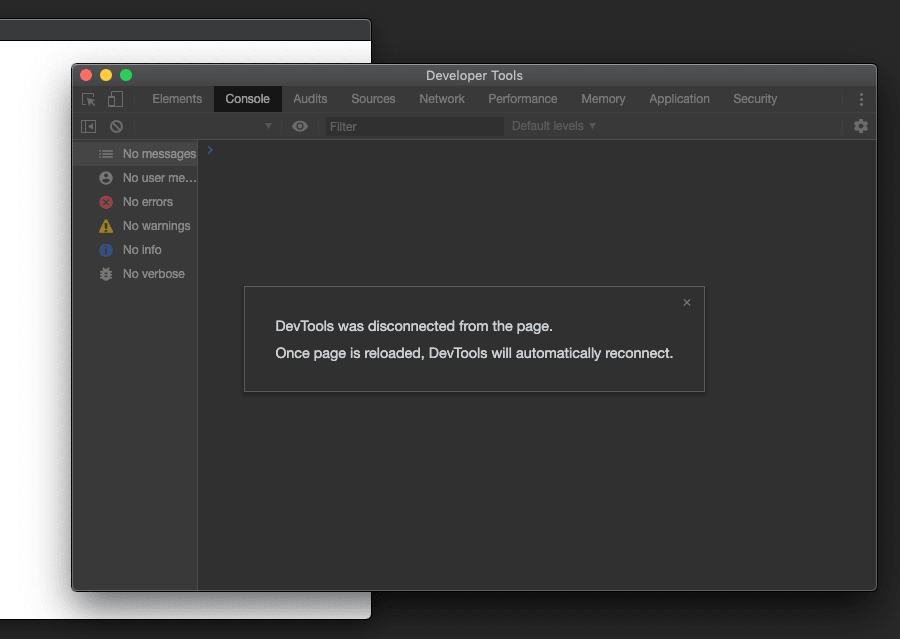

Electron v7 and v8 would actually crash when booted with Yarn PnP, with no logging: just a blank screen and a message that DevTools had been disconnected.

After some digging, and the discovery of the ELECTRON_ENABLE_LOGGING environment variable (which prints all of Chromium's internal logging to the console, letting you catch error messages before the window connects to DevTools), we found that the crash was caused by Yarn PnP synchronously instantiating its libzip WASM library.
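For reference, a small sketch of launching an app with that variable set from a Node script. The helper names are hypothetical; the only Electron-specific parts are the ELECTRON_ENABLE_LOGGING variable and the fact that require("electron") returns the executable path when called from plain Node.

```javascript
const { spawn } = require("child_process");

// ELECTRON_ENABLE_LOGGING makes Electron print Chromium's internal logging to the console,
// so errors that happen before DevTools attaches are still visible.
function electronDebugEnv(baseEnv = process.env) {
  return { ...baseEnv, ELECTRON_ENABLE_LOGGING: "1" };
}

// Launch an app with logging enabled. `electronBinary` is the path to the Electron executable.
function launchElectronWithLogging(electronBinary, appDir) {
  return spawn(electronBinary, [appDir], { env: electronDebugEnv(), stdio: "inherit" });
}

// Example (requires the electron package to be installed):
// launchElectronWithLogging(require("electron"), ".");
```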

While Node and V8 in general have no problem with synchronous instantiation of WASM modules, Chromium imposes a 4KB hard limit on synchronous instantiation.
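The practical fix is to use the asynchronous WebAssembly API, which works in both Node and Chromium. The sketch below assumes the 4KB figure quoted above; the constant and helper names are illustrative, and the module used is the smallest valid WASM binary (the magic number plus version 1).

```javascript
// Per the limit described above: synchronous compilation of larger modules is refused
// on Chromium's main thread.
const CHROMIUM_SYNC_COMPILE_LIMIT = 4 * 1024; // bytes

function wouldHitChromiumSyncLimit(bytes) {
  return bytes.byteLength > CHROMIUM_SYNC_COMPILE_LIMIT;
}

// Risky in an Electron renderer for modules over the limit:
//   const instance = new WebAssembly.Instance(new WebAssembly.Module(bytes), imports);
// Works in both Node and Chromium:
async function instantiateWasm(bytes, imports = {}) {
  const { instance } = await WebAssembly.instantiate(bytes, imports);
  return instance;
}

// The smallest valid module: the "\0asm" magic number followed by version 1.
const emptyModule = new Uint8Array([0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00]);
instantiateWasm(emptyModule).then((instance) => {
  console.log(instance instanceof WebAssembly.Instance); // true
});
```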

Electron combines a Node environment with the regular V8 context provided by Chromium; this is just one of the nuances where Electron acts more like a web browser than the Node server runtime.

It turned out there was nothing PnP-specific here: these versions of Electron simply crashed when given a synchronous instantiation of a WASM module over that 4KB limit. There's an issue tracking the error, though given that Electron v9 reports the error without crashing, it's probably not worth poking.

We decided that having PnP run inside Electron wasn't actually a goal. We run a webpack-dev-server with fast-refresh that feeds its bundle to Electron anyway, so Electron has no need to understand or interact with the PnP system.

We gain the performance benefits of PnP in production by bundling everything. Using PnP on the webpack-dev-server end gives us similar performance benefits during development.

Native module resolution, the bindings package and prebuilds

Node uses a different code path to load native modules from the file system, one that's incompatible with Yarn PnP's virtualised zip filesystem.

Yarn automatically 'unplugs' native modules, leaving regular files on the file system that can be accessed by Node.

In my testing I found the bindings package had trouble locating these files despite them being on the file system.

The bindings package does this lookup at runtime, but ideally it should be done at compile time. I'll collect my thoughts on this into a blog post at a later date.

Our solution was to write a Yarn plugin that replaces the bindings package with an installation-time static require call to the correct file. It intercepts any packages that depend on bindings and replaces their copy of bindings with a generated JavaScript file that looks like this:

```javascript
// Bindings taken from node_modules/@prebuilds/@serialport-bindings-v9.0.0-darwin-x64-electron-76/Release/bindings.node
const staticRequire = require("./bindings.node");

// The requested file name is ignored: the correct .node file was resolved at install time.
module.exports = (fileLookingFor) => {
  return staticRequire;
};
```

It also downloads the relevant bindings file from the prebuild mirror and places it in the expected location.
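A sketch of how such a shim might be generated at install time. prebuildDirName and generateBindingsShim are hypothetical helpers; the naming scheme only mirrors the example path in the generated file's comment, and the shim assumes each package loads a single addon.

```javascript
// Hypothetical helper mirroring the example directory name:
// <package name with "/" replaced by "-">-v<version>-<platform>-<arch>-<runtime>-<abi>.
// The real mirror layout used by the plugin may differ.
function prebuildDirName({ name, version, platform, arch, runtime, abi }) {
  return `@prebuilds/${name.replace("/", "-")}-v${version}-${platform}-${arch}-${runtime}-${abi}`;
}

// Generates the replacement for a package's copy of `bindings`: a static require of the
// .node file placed next to it at install time, which bundlers can follow statically.
function generateBindingsShim(bindingsSource) {
  return [
    `// Bindings taken from ${bindingsSource}`,
    `const staticRequire = require("./bindings.node");`,
    `module.exports = (fileLookingFor) => {`,
    `  return staticRequire;`,
    `};`,
    ``,
  ].join("\n");
}

const dir = prebuildDirName({
  name: "@serialport/bindings",
  version: "9.0.0",
  platform: "darwin",
  arch: "x64",
  runtime: "electron",
  abi: "76",
});
console.log(generateBindingsShim(`node_modules/${dir}/Release/bindings.node`));
```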

This removes the need for Webpack to have these dependencies marked as 'external', allowing for better static analysis, tree shaking, etc.

If you use a package like node-addon-loader, Webpack will treat the .node file like any other file asset, hashing it and moving it to where you keep your bundle.

Zero-installs and bulk cache downloads

Zero-installs are another feature of Yarn PnP that we've implemented in our templates. Per OS, a cache containing all dependencies is collected. A three-way diff is performed between them to find common and unique dependencies per OS. Additionally, this gives us a yarn.lock file per OS. The common, windows, linux and darwin caches are then tarred and uploaded to our registry.
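The split can be sketched with plain set operations. The cache entry names below are placeholders (real entries include versions and hashes): anything present in every OS cache goes into the common archive, and the rest stays with its OS.

```javascript
// A sketch of the per-OS cache split. Needs at least one OS in the input.
function splitCaches(cachesByOs) {
  const caches = Object.values(cachesByOs);
  // Files present in every OS cache go into one shared archive.
  const common = new Set([...caches[0]].filter((file) => caches.every((cache) => cache.has(file))));
  // Everything else goes into that OS's own archive.
  const perOs = {};
  for (const [osName, files] of Object.entries(cachesByOs)) {
    perOs[osName] = new Set([...files].filter((file) => !common.has(file)));
  }
  return { common, perOs };
}

const { common, perOs } = splitCaches({
  windows: new Set(["debug.zip", "react.zip", "win-only.zip"]),
  linux: new Set(["debug.zip", "react.zip", "linux-only.zip"]),
  darwin: new Set(["debug.zip", "react.zip", "darwin-only.zip"]),
});
console.log([...common]); // [ 'debug.zip', 'react.zip' ]
console.log([...perOs.linux]); // [ 'linux-only.zip' ]
```

In this sketch a package shared by only two operating systems ends up in both of their per-OS archives; splitting further would trade duplicated downloads for more archives.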

Upon installation of a template, we check for this bulk cache download and grab it if possible, before running the regular yarn install to generate the .pnp.js file.

Results

Overall our migration was relatively painless and was completed within a couple of days in total. The ability to write Yarn plugins let us achieve more than we had hoped. By confining PnP to the Webpack side of things, we avoided all the major headaches while still gaining the benefits of the system.

PnP brought our template size down from 686.6 MB (with 38,741 items in the node_modules) to 266.3 MB (with 2,115 items in the .yarn folder).

This compresses down to a 183.0 MB distributable archive for non PnP, and a 146.7 MB archive for PnP. It's also about 5 times faster to unzip the PnP archive.

Cold cache installations previously took about 2 minutes on my development hardware, with hot cache installations taking about 30 seconds.

With PnP and zero-installs that has been brought down to ~25 seconds for a cold cache installation, and ~10 seconds for a hot cache installation.
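Putting those measurements side by side (no new data, just arithmetic on the figures above, treating "about 2 minutes" as 120 seconds):

```javascript
// Simple ratios derived from the measurements quoted in this post.
const results = {
  sizeReductionPercent: 100 * (1 - 266.3 / 686.6), // template size on disk
  fewerItemsFactor: 38741 / 2115, // node_modules items vs .yarn items
  archiveReductionPercent: 100 * (1 - 146.7 / 183.0), // distributable archive
  coldInstallSpeedup: 120 / 25, // ~2 minutes vs ~25 seconds
  hotInstallSpeedup: 30 / 10, // ~30 seconds vs ~10 seconds
};
for (const [metric, value] of Object.entries(results)) {
  console.log(`${metric}: ${value.toFixed(1)}`);
}
// sizeReductionPercent: 61.2
// fewerItemsFactor: 18.3
// archiveReductionPercent: 19.8
// coldInstallSpeedup: 4.8
// hotInstallSpeedup: 3.0
```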

Overall we're very pleased with Yarn v2.