Concourse Linux worker with USB for hardware tests

Scott

We use Concourse CI for our CI/CD pipelines. Containerisation is the backbone of Concourse's architectural design; tiny specialised containers handle resource checks, fetch assets and send notifications, while more serious user-defined containers back the build and test environments.

This is a better design choice compared to a more conventional 'run on the host' approach, as the underlying container can be easily swapped out for a given job to let you test or build against different run-time versions, etc.

Unlike what seems to be the larger share of Concourse users, who rely on some form of orchestration or management tooling (Kubernetes, Ansible, BOSH, etc.), we run our system locally as we need to test against local hardware.

This post outlines basic install instructions for a 'typical' Linux Concourse worker, then shows how we set up a second Linux worker that runs tasks directly on the host, for the tests which need to touch hardware.

Configure a Linux Build worker

Our infrastructure is hosted as a series of LXC containers and QEMU virtual machines running under a Proxmox hypervisor.

This worker is a Debian 11 virtual machine (6 cores, 20GB RAM, 100GB disk), as nested containerisation can be tricky to get running properly inside LXC containers.

A normal worker setup is pretty simple:

    # Download Concourse
    export CONCOURSE_VERSION=7.6.0
    wget https://github.com/concourse/concourse/releases/download/v${CONCOURSE_VERSION}/concourse-${CONCOURSE_VERSION}-linux-amd64.tgz
    tar -zxf concourse-${CONCOURSE_VERSION}-linux-amd64.tgz
    sudo mv concourse /usr/local/concourse

    # Create the concourse worker directory
    mkdir /etc/concourse

    # Copy the Web node's public key, and the worker's private key
    scp [email protected]:tsa_host_key.pub /etc/concourse/tsa_host_key.pub
    scp [email protected]:worker_key /etc/concourse/worker_key

The configuration is done through environment variables, so I like to put them in a /etc/concourse/worker_environment file which will be referenced in the systemd.service file.

    CONCOURSE_WORK_DIR=/etc/concourse/workdir
    CONCOURSE_TSA_HOST=10.10.1.100:2222
    CONCOURSE_TSA_PUBLIC_KEY=/etc/concourse/tsa_host_key.pub
    CONCOURSE_TSA_WORKER_PRIVATE_KEY=/etc/concourse/worker_key
    CONCOURSE_RUNTIME=containerd
    CONCOURSE_CONTAINERD_DNS_SERVER=10.10.1.1

The worker's process is managed through a simple systemd service put in your choice of systemd path. I use /etc/systemd/system/concourse-worker.service:

    [Unit]
    Description=Concourse CI worker process
    After=network.target

    [Service]
    Type=simple
    User=root
    Restart=on-failure
    EnvironmentFile=/etc/concourse/worker_environment
    ExecStart=/usr/local/concourse/bin/concourse worker

    [Install]
    WantedBy=multi-user.target

Then it's just a matter of enabling the service (sudo systemctl enable concourse-worker) and starting it (sudo systemctl start concourse-worker).
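Before starting the service, it can help to sanity-check the environment file. The following is a minimal sketch, not part of the setup described here: `check_worker_env` is a hypothetical helper, and its list of "required" variables is an assumption based on the example file above. It confirms each variable is set and that the two key files exist.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check for the worker environment file.
# The function name and the list of required variables are assumptions
# based on the example file above, not a Concourse-provided tool.

check_worker_env() {
  local env_file="$1" key value status=0

  [ -r "$env_file" ] || { echo "cannot read $env_file" >&2; return 1; }

  for key in CONCOURSE_WORK_DIR CONCOURSE_TSA_HOST \
             CONCOURSE_TSA_PUBLIC_KEY CONCOURSE_TSA_WORKER_PRIVATE_KEY; do
    # Take the last KEY=value line for this key, ignoring other lines.
    value=$(sed -n "s/^${key}=//p" "$env_file" | tail -n 1)
    if [ -z "$value" ]; then
      echo "missing: $key" >&2
      status=1
      continue
    fi
    # The two *_KEY variables must point at files that exist.
    case "$key" in
      *_KEY)
        [ -f "$value" ] || { echo "no such file for $key: $value" >&2; status=1; }
        ;;
    esac
  done

  return "$status"
}
```

Something like `check_worker_env /etc/concourse/worker_environment && sudo systemctl start concourse-worker` would then refuse to start the service if a variable or key file is missing.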

Hopefully everything is OK and the worker will start communicating with the web node, then appear in the worker list:

    scott@octagonal ~ $ fly -t eui workers
    name                   containers  platform  tags  team  state    version  age
    worker-debian-vm       33          linux     none  none  running  2.3      23h53m
    worker-macos-vm.local  0           darwin    none  none  running  2.3      54d
    worker-windows-vm      0           windows   none  none  running  2.3      21d

This is great for most of our workloads, but while passing USB hardware through to the Debian VM with Proxmox is reasonably painless, passing it into the containerd images for test use is somewhat more difficult.
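As an aside, the worker-list check can also be scripted. This is a rough sketch: `worker_state` is a hypothetical helper that reads `fly workers` output on stdin and prints the state of a named worker. It assumes the column layout shown in the listing (state as the sixth whitespace-separated field), which would not hold if a worker's tags were printed with spaces in them.

```shell
# Hypothetical helper: print the "state" column for one worker.
# Reads `fly workers` output on stdin; assumes state is the sixth field.
# Exits non-zero if the worker is not listed at all.
worker_state() {
  awk -v name="$1" '$1 == name { print $6; found = 1 } END { exit !found }'
}
```

For example, `fly -t eui workers | worker_state worker-debian-vm` prints `running` for the listing shown.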

There are people who have played with hardware passthrough for use in Concourse tasks, but I found that this approach didn't meet our requirement for handling hot-plug style events - we use hardware automation to change the downstream device to meet different test requirements which are non-trivial to pass into Concourse.

I quickly found that any hot-plug events broke the workaround, and this included trying to set up a Concourse worker in a privileged LXC container!

Throwing the benefits of containerisation away

Well, for the tasks that need to touch hardware at least...

We'll create another worker VM like before, but we're going to force the houdini runtime on Linux. This is done through environment variables as before, and we'll tag this worker as hardware to restrict which jobs are scheduled to it.

    CONCOURSE_TAG='hardware'
    CONCOURSE_RUNTIME=houdini

houdini is self-described as "the world's worst containerizer", and is what macOS and Windows Concourse workers use as their 'runtime': there's no containerisation occurring, just temporary file structures and some clean up once the jobs finish.

This isn't recommended for production use as it violates Concourse's paradigm, but it's a reasonable compromise for us as:

- we're already running our non-Linux OSes in this manner, and many older CI systems do this by default,

- we're only running a small subset of tasks which need hardware access, and the CI/CD is exclusively for our own jobs/codebases at this point,

- wrapping it with a VM and constrained pass-through limits the scope for abuse in a more palatable manner than relaxing cgroups and elevating our main Linux worker.

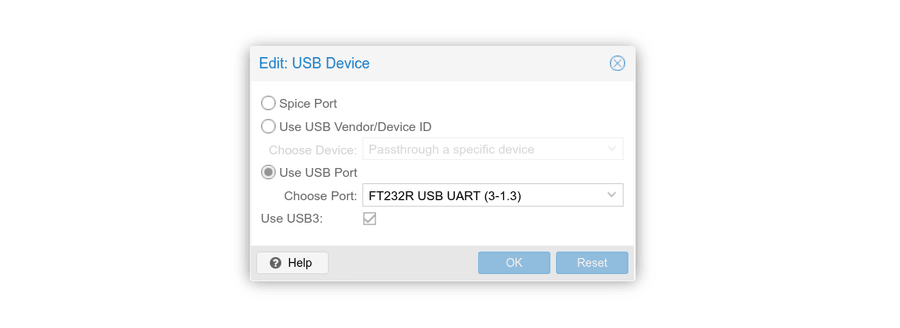

That's it, just pass hardware through to the VM. Proxmox makes this pretty easy - add a device under the hardware tab of the relevant VM.

As the USB port ID 3-1.3 is passed through, not the device VID/PID, it applies to anything connected to that USB port and doesn't break when we hotswap hardware.
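The same mapping can also be made from the Proxmox host's command line rather than the web UI. The sketch below only prints the `qm set` command instead of running it. `usb_port_passthrough_cmd` is a hypothetical helper, and the VM ID and `usb0` slot in the example are placeholders rather than our real values.

```shell
# Hypothetical helper: print (not run) the qm command that passes a physical
# USB port through to a VM. The VM ID and slot number are placeholders.
usb_port_passthrough_cmd() {
  local vmid="$1" port="$2" slot="${3:-0}"

  # A port spec looks like bus-port[.port...], e.g. 3-1.3, whereas a
  # device spec is a vendor:product pair. Reject the latter, since binding
  # to a specific device is what breaks when the hardware is swapped.
  case "$port" in
    *:*)
      echo "expected a bus-port ID such as 3-1.3, got a VID:PID: $port" >&2
      return 1
      ;;
  esac

  printf 'qm set %s -usb%s host=%s\n' "$vmid" "$slot" "$port"
}
```

Calling `usb_port_passthrough_cmd 120 3-1.3` prints `qm set 120 -usb0 host=3-1.3`, which can be reviewed before running it on the hypervisor.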

Testing Hardware

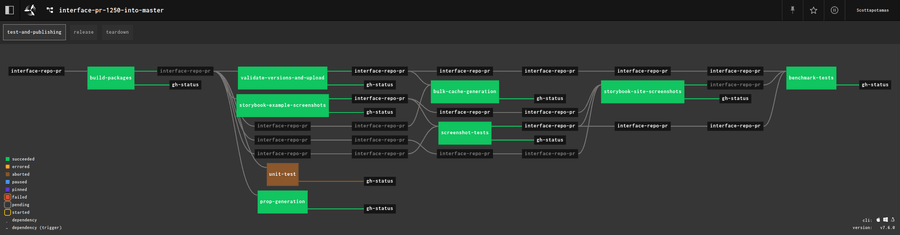

Our main pipeline is spun up by a webhook when a pull-request is made, and runs when commits to that branch are pushed.

The pipeline includes steps for builds, unit tests, documentation, benchmarking runs, and storybook screenshots for our website docs.

The rough skeleton of the hardware testing is pretty straightforward - just ensure tags: [hardware] is used to select the right Linux worker.

macOS and Windows don't need anything special as anything running on them has access to our hardware fixtures passed into the VMs. The yaml looks something like this:

    - in_parallel:
      - task: linux-screenshots
        tags: [hardware]
        config:
          platform: linux
          inputs: [ ... ]
          run:
            path: bash
            args:
              - -c
              - |
                # Set the arena to use the AVR target with a ft232 adapter
                curl -X POST http://10.10.1.175/target -d 5
                curl -X POST http://10.10.1.175/adapter -d a

                # Wait a few seconds, then print lsusb for debug
                sleep 3
                lsusb

                # Virtual display
                export DISPLAY=':99.0'
                Xvfb :99 -screen 0 1920x1080x24 > /dev/null 2>&1 &

                # Run the screenshot tests
                yarn run screenshot
          outputs:
            - name: screenshots-linux
              path: ./redacted/test/screenshots
      - task: darwin-screenshots
        config:
          platform: darwin
          inputs: [ ... ]
          run:
            path: bash
            args: [ ... ]
          outputs:
            - name: screenshots-darwin
              path: ./redacted/test/screenshots
      - task: windows-screenshots
        config:
          platform: windows
          inputs: [ ... ]
          run:
            path: powershell
            args: [ ... ]
          outputs:
            - name: screenshots-windows
              path: ./redacted/test/screenshots
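The fixed sleep 3 in the Linux task could be swapped for a poll that waits until the expected adapter has enumerated. This is a sketch rather than what the pipeline above runs: `wait_for_usb` is a hypothetical helper, and the `0403:6001` ID used in the example (the usual ID of an FTDI FT232R) is only illustrative; substitute whatever `lsusb` prints for your device.

```shell
# Hypothetical helper: poll lsusb until a device with the given
# vendor:product ID appears, or give up after a timeout (in seconds).
wait_for_usb() {
  local id="$1" timeout="${2:-10}" waited=0

  while [ "$waited" -lt "$timeout" ]; do
    # lsusb prints lines like "Bus 001 Device 004: ID 0403:6001 ..."
    if lsusb | grep -qi "ID ${id}"; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done

  echo "USB device ${id} did not appear within ${timeout}s" >&2
  return 1
}
```

In the task script this would read `wait_for_usb 0403:6001 10 || exit 1` in place of the sleep, so the job fails early with a clear message if the arena did not switch devices.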

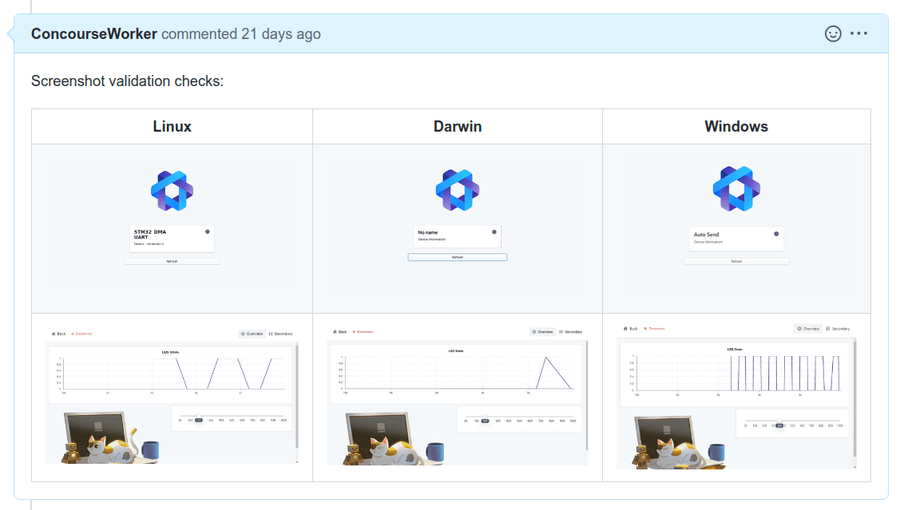

The result is parallel tests for each OS, which:

- pull the latest tooling and builds,

- boot our template in a sandbox,

- check that the hardware is found and shows up on the connections page correctly,

- connect to the hardware and take a screenshot of the running UI,

- upload these screenshots to S3, and then make a GitHub comment on the PR with image embeds.

The comment is formatted with images in a table, making it really obvious if one (or all three) of the platforms has a problem.
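To illustrate that comment layout, the following sketch builds a Markdown table with one image per platform. The function name, file names and base URL are placeholders, not the ones our pipeline uses, and posting the comment itself is left out.

```shell
# Hypothetical helper: print a Markdown table with one screenshot per platform.
# Assumes the screenshots are reachable at <base_url>/<platform>.png.
render_screenshot_comment() {
  local base_url="$1" platform header="|" divider="|" images="|"

  for platform in linux darwin windows; do
    header="${header} ${platform} |"
    divider="${divider} --- |"
    images="${images} ![${platform}](${base_url}/${platform}.png) |"
  done

  # Header row, divider row, then a single row of image embeds.
  printf '%s\n%s\n%s\n' "$header" "$divider" "$images"
}
```

The output of `render_screenshot_comment https://example.com/pr-1` is a three-column table, so a missing or broken screenshot stands out next to the two that rendered.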